Martin on .Net

My hints and tips for cleaner, better code!

Sunday, 16 February 2020

Martin on .Net is changing to https://codifice.uk/

Martin

Thursday, 22 November 2012

Notes on Configuring a New EPiServer 6 R2 Website for Continuous Integration

I’m assuming that you’ve read the installation instructions:

Creating a new blank EPiServer Site Project and Solution

- Prepare Repository

- Check out trunk/main branch to working copy

- Create an Empty VS Solution root of the working copy

- Download the 6R2 installer from EPiServer World (you’ll need to register):

- http://world.episerver.com/Download/Categories/Products/EPiServer-CMS/

- Run installer and deploy a Core deployment to the Working Copy, creating a project folder

- Open VS solution

- Add New Empty Website Project (use a temporary name)

- Configure project properties as required:

- Assembly Name (<client>.WebSite)

- Assembly Info (Version, Name, Copyright, etc)

- Namespace (<client>.WebSite)

- Target Framework (.Net 3.5)

- Treat Warnings as Errors (All Configurations)

- Strong Naming Key

- Code Analysis Rules

- Save and Remove the Temporary Website Project from the Solution

- Move project file into EPiServer Website folder and rename to match

- Edit the file in notepad to replace all instances of the Temporary Project Name with the real one

- Also copy

- web.*.config (not web.config)

- the Properties folder

- Any supporting files you added (Strong naming key, Code Analysis rules, etc)

- Add the moved Project file to the solution as an Existing Project

- Enable ‘Show All Files’ and include all

- Edit episerver.config to configure:

- Start Page Id – set startPageId attribute to ‘1’ (from ‘0’) (/sites/site/siteSettings[startPageId])

- Site Description attribute

- Site Url - the siteUrl attribute (/sites/site/siteSettings[siteUrl])

- Edit EPiServerFramework.config to ensure that the correct siteHosts are set

- Test the project builds as expected and website is working (place holder page is displayed)

- place holder page is displayed

- Commit the solution to version control, ensuring that only code and configuration files are committed not build outputs or per user settings (/bin, /obj, *.user files etc).

Managing Dependencies for CI

The project builds successfully as all of the required dependencies exist within the bin folder of the website. This means the the build server will not be able to successfully build the project. We can manage this in two ways:

- (Recommended) Move all of the DLL’s from the bin folder into a ‘3rd Party’ or ‘libs’ folder and create references to all of the top level assemblies from there, ensuring the ‘Copy Local’ is enabled.

- For each additional EPiServer installed to the website this procedure will need to be repeated

- Manage the dependencies via the EPiServer NuGet service (required NuGet VS extension) (not recommended as the feed is outdated compared to the download from EPiServer World) Admission: I couldn’t get this to work, so I might be a bitter!

From here it should be build scripts as usual!

Here Be Dragons – Configuring EPiServer Dependencies using NuGet

I couldn’t get this to work as intended – in the hope that I’ve done something stupid and easiliy correctable….

Managing dependencies via NuGet is the new standard for managing dependencies and has many advantages over manually maintaining references. For CI builds, not storing binary files within version control is a big win.

Unfortunately, the EPiServer NuGet feed doesn’t take advantage of all NuGet offers and is already a little out of date. However, if you want to attempt to get a core EPiServer site running using NuGet dependencies:

- Enable NuGet Package Restore on the Solution (from Project Menu, or Right Click on Solution)

- Add the EPiServer NuGet feed (http://nuget.episerver.com/feed/packages.svc/) to the Package Manager Sources

- Install the required packages as references to the EPiServer Website project via the Package Manager Console (Ensuring the EPiServer package source is selected)

- Install-Package EPiServer.CMS.Core -Version 6.1.379.0

- Install-Package EPiServer.Framework -Version 6.2.267.1

- The balance of the dependencies will need to be references from the 3rd Party folder.

- At this point the project wouldn’t display as a website will a null reference exception (no stack trace)

Sunday, 15 January 2012

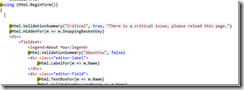

Announcing MVC3 Validation Groups

As this work involved a bit of the old copy-paste-adapt from the standard MVC code, it’s a bit complicated to go into the fine details of how to replicate the functionality for yourself. So I’ve setup a codeplex project with a reusable library all ready to go! (Of course all the source code is up there as well!)

How to use

- Download the latest release. (Get the latest Example Site as well to see a preconfigured MVC3 Website and example integration)

- Add a reference to ‘McjDevelopment.Mvc3ValidationGroups.dll’ to your MVC3 project

- Copy ‘jquery.validate.unobtrusive.validationgroups.js’ to your ‘scripts’ folder

- Decorate your view models with the [ValidatorGroup] attribute specifying the validation groups that that field belongs to (space delimited)

- Update your view to use ‘jquery.validate.unobtrusive.validationgroups.js’ immediately after ‘jquery.validate.unobtrusive.js’ (or ‘jquery.validate.unobtrusive.min.js’)

- Update your view to include where you want each groups Validation Summary to appear

- Amend your ‘Action’ buttons and links to include ‘data-val-valgroup-name’ attribute and specify which validation groups should be triggered by the user clicking on that element

- Amend you’re controller to check the appropriate validation groups when called. (this implementation shows a common action being called with uses the clicked button name to select the validation group, an alternative is to us JS to dynamically alter the form’s action location)

- Test validation groups!

Tuesday, 1 November 2011

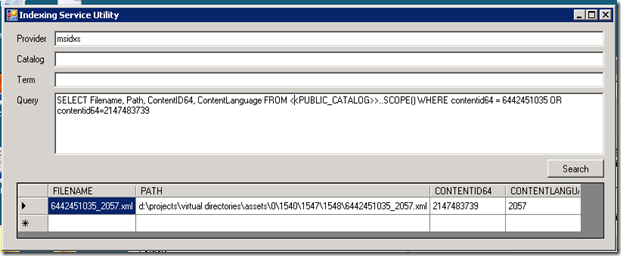

Ektron: Finding “Missing” Content in the Index on 64bit Windows

When content is created on an Ektron with a large content id (commonly on eSync enabled workareas) it may corrupt the content id.

For example if a new content item has an id of 6442451035 it will actually be indexed with a contentid of 2147483739. This is because the original id is larger than an unsigned integer can hold.

1: long contentId = 6442451035;

2: Console.WriteLine((uint)contentId); //2147483739

You can then query your public index to see if content has been misindexed by running a query to find your content

SELECT Filename, Path, ContentID64, ContentLanguage FROM <<PUBLIC_CATALOG>>..SCOPE() WHERE contentid64=6442451035 OR contentid64=2147483739

If the content is not indexed at all, you won’t find any content and the issue is something else. However, if you get a result but the CONTENTID64 value is wrong, then you should be able to fix the problem.

To fix this you can follow the following procedure:

- Download Updated QLXFilters (and check that they are newer than your current dlls!)

- Backup QLXFilter.dll from c:\windows\system32 and c:\windows\sysWoW64 on the server

- Stop the Indexing Service

- Replace the dlls with the updated versions

- Start the Indexing Service

- Run a full SearchConfigUI, if you have assets missing from the current server please untick Documents from the advanced tab.

- Select Re-index existing Catalogues - this may affect the stability of you website.

- After this has completed please check the Docs to Index on the indexing service and ensure that this has finished indexing.

- After this is complete please run your query and you should now see the results return correctly.

Tuesday, 16 August 2011

Persisting the Page Title across Update Panel Post backs

There’s a fun bug with AsyncPostbacks blanking out the Page Title (http://stackoverflow.com/questions/627378/page-losing-title-after-updatepanel-asyncpostback), the recommended fix is to rebind the page title on each Async Postback or to use the declarative page title attribute in the aspx. My fix is to fix a JS bug in JS:

1: $(function(){

2: var prm = Sys.WebForms.PageRequestManager.getInstance();

3: if (!(prm)) return;

4: document.orginalTitle=document.title;

5: instance.add_endRequest(function(s, e){

6: if (document.title.replace(/\s/g,"").length==0)

7: document.title=document.orginalTitle;

8: });

9: });

This JS code will ensure that the page title is persisted across ajax requests, with no changes to the server side code. If you don’t have JQuery available (for shame!) then just move the function body into a code block which will execute on the GET request/first view of the page.

Thursday, 4 August 2011

Ektron: Treating Office Files as Normal DMS Assets

One of the features of Ektron is tight office integration allowing the editing of office files directly from the workarea. However, in some circumstances you may want to treat these as normal DMS assets (like PDF files) as their the end results of an offline publishing process.

The Ektron support fix for this is to modify the workarea files (detailed below), which would then need to be re-applyed with every upgrade. This is easy enough to do (if you remember to do it with each upgrade), but I would prefer a more unobtrusive fix which is applied via configuration.

The Manual Fix (Ektron Supported)

Edit /workarea/edit.aspx and comment out the following lines (~line 2110):

1: var isOffice = document.getElementById("isOfficeDoc");

2:

3: if ((isOffice != null) && (isOffice.value == "true") && (ShowMultipleUpload() || !IsBrowserIE()))

4: {

5: g_initialPaneToShow = 'dvSummary';

6: var contentTabHeader = document.getElementById("dvContent");

7: var contentTabContent = document.getElementById("_dvContent");

8: if (contentTabHeader != null)

9: contentTabHeader.style.display="none";

10: if (contentTabContent != null)

11: contentTabContent.style.display="none";

12: }

Also, comment out the following lines of /workarea/edit.aspx.vb (~line 1945)

1: If (isOfficeDoc.Value = "true") Then

2: phContent.Visible = False

3: phEditContent.Visible = False

4: End If

The workarea will now treat office files in the same manner as PDF DMS Assets.

The Unobtrusive Fix (Unsupported)

Looking at what the official fix achieves does, it all hangs on the value of the “isOffice” hidden field being ‘true’. Realising this it’s an (almost) trivial task to create an HttpModule to manipulate the control tree of the edit page to get the same effect. The major advantage of this is that it won’t require modification of Ektron files and can be disabled by simply unregistering the module within the web.config.

1: using System;

2: using System.Reflection;

3: using System.Web;

4: using System.Web.UI;

5: using System.Web.UI.HtmlControls;

6:

7: namespace MartinOnDotNet.Support

8: {

9: /// <summary>

10: /// Force OfficeDocuments to be treated as normal DMS Assets

11: /// </summary>

12: public class OfficeDocumentFixModule : IHttpModule

13: {

14:

15: /// <summary>

16: /// Initializes a module and prepares it to handle requests.

17: /// </summary>

18: /// <param name="context">An <see cref="T:System.Web.HttpApplication"/> that provides access to the methods, properties, and events common to all application objects within an ASP.NET application</param>

19: public void Init(HttpApplication context)

20: {

21: if (context == null) throw new ArgumentNullException("context");

22: context.PreRequestHandlerExecute += new EventHandler(OnPreRequestHandlerExecute);

23: }

24:

25: private void OnPreRequestHandlerExecute(object sender, EventArgs e)

26: {

27: HttpContext current = HttpContext.Current;

28: if (current == null

29: || current.Handler == null

30: || current.Request == null

31: || !current.Request.Url.AbsolutePath.EndsWith("/workarea/edit.aspx", StringComparison.OrdinalIgnoreCase)) return;

32:

33: Page page = current.Handler as Page;

34: page.PreInit += new EventHandler(OnPreInit);

35:

36: }

37:

38: /// <summary>

39: /// Always return false regardless of actual value

40: /// </summary>

41: public class AlwaysFalseHiddenField : System.Web.UI.HtmlControls.HtmlInputHidden

42: {

43: /// <summary>

44: /// Gets or sets the value associated with the <see cref="T:System.Web.UI.HtmlControls.HtmlInputControl"/> control.

45: /// </summary>

46: /// <value></value>

47: /// <returns>

48: /// The value associated with the <see cref="T:System.Web.UI.HtmlControls.HtmlInputControl"/>.

49: /// </returns>

50: public override string Value

51: {

52: get

53: {

54: return "false";

55: }

56: set

57: {

58: base.Value = value;

59: }

60: }

61: }

62:

63:

64:

65: /// <summary>

66: /// Called when the Page load event fires

67: /// </summary>

68: /// <param name="sender">The sender.</param>

69: /// <param name="e">The <see cref="System.EventArgs"/> instance containing the event data.</param>

70: private void OnPreInit(object sender, EventArgs e)

71: {

72: Page page = sender as Page;

73: if (page == null) return;

74: PropertyInfo officeDocProperty = page.GetType().GetProperty("isOfficeDoc", BindingFlags.Instance | BindingFlags.NonPublic);

75: if (officeDocProperty == null) return;

76:

77: HtmlInputHidden hil = officeDocProperty.GetValue(page, null) as HtmlInputHidden;

78: AlwaysFalseHiddenField fhil = new AlwaysFalseHiddenField();

79: fhil.ID = hil.ID;

80: fhil.Name = hil.Name;

81: fhil.Value="false";

82: page.PreRenderComplete += (s, ea) =>

83: {

84: hil.Value = "false";

85: };

86: officeDocProperty.SetValue(page, fhil, null);

87:

88: }

89:

90:

91: /// <summary>

92: /// Disposes of the resources (other than memory) used by the module that implements <see cref="T:System.Web.IHttpModule"/>.

93: /// </summary>

94: public void Dispose()

95: {

96: //throw new NotImplementedException();

97: }

98:

99:

100: }

101: }

Simply wire up the module in web.config and job’s a good ‘un.

Thursday, 14 July 2011

Experiments with ADO.Net, Extension Methods and Anonymous Methods

As much as at pains me to admit it, an ORM Framework is not the answer to all data access problems. Sometimes the quickest, easiest and most maintainable approach is back-to-basics flat ADO.Net. For those developers whose (development) life began with Entity Framework, NHibernate or SubSonic. This is a fairly typical example of an ADO.Net method (in a well structured code base):

1: private static IEnumerable<ContentAwaitingApproval> GetPendingApprovals(long approvalGroupId)

2: {

3: List<ContentAwaitingApproval> approvals = new List<ContentAwaitingApproval>();

4: using (SqlConnection conn = new SqlConnection(ConfigurationManager.ConnectionStrings[Properties.Settings.Default.ConnectionStringName].ConnectionString))

5: {

6: using (SqlCommand cmd = conn.CreateCommand())

7: {

8: cmd.CommandText = "SELECT ContentId, LanguageId, Status FROM Approvals WHER ApprovalGroupID=@groupId";

9: cmd.Parameters.AddWithValue("groupId", approvalGroupId);

10: cmd.Connection.Open();

11: using (SqlDataReader dr = cmd.ExecuteReader())

12: {

13: while (dr.Read())

14: {

15: approvals.Add(new ContentAwaitingApproval

16: {

17: ContentId = dr.GetInt64(0)

18: ,

19: LanguageId = dr.GetInt32(1)

20: ,

21: Status = dr.GetString(2)

22: });

23: }

24: }

25: }

26: }

27: return approvals;

28: }

What’s occuring:

- Create a list to contain the results

- Create a new connection object (using a connection string defined in the configuration file)

- Create a SQL Command object

- Set the parameterised query text (if this is a stored procedure then you need to set the CommandType to StoredProcedure)

- Set any parameters the query requires

- Open the connection to the database

- Execute the query

- Read through all the results record by record

- For each record add a new entry to the results list

- Close the reader,command and connection and dispose objects

- Return the results list

So, out of 11 steps only 4 actually related to the functionality of the method. The other 7 are all boiler plate, repeat each time, steps that are required for ADO.Net. If you want to add more detailed exception handling then that’s even more boiler plate code messing up your coding zen. When you look at it like that, it’s easy to start reaching from the ORM toolbox.

But wait, ORM isn’t the only thing that’s happened over the last 10 years! We’ve now got refactoring tools, extension methods and anonymous methods! What can these modern day miracles do for us?

Well, for starters we can tidy up that SqlConnection initializer to get something a bit cleaner and maybe some configuration validation code in place:

1: private static SqlConnection ToConnection(this string connectionStringName)

2: {

3: if (connectionStringName.IsNullOrWhiteSpace()) throw new ArgumentException("Connection String Name cannot be empty", "connectionStringName");

4: ConnectionStringSettings cns = ConfigurationManager.ConnectionStrings[connectionStringName];

5: if (cns == null) throw new ArgumentException("Connection String Name '{0}' cannot be found".ToFormattedString(connectionStringName), "connectionStringName");

6: return new SqlConnection(cns.ConnectionString);

7: }

So replace:

using (SqlConnection conn = new SqlConnection(ConfigurationManager.ConnectionStrings[Properties.Settings.Default.ConnectionStringName].ConnectionString))

With

using (SqlConnection conn = Properties.Settings.Default.ConnectionStringName.ToConnection())

Next, that parameter mapping code can be easily refactored away into a reusable block and add some additional intelligence too:

1: private static void AddParametersToCommand(IDictionary<string, object> parameters, SqlCommand cmd)

2: {

3: if (parameters == null) return;

4: foreach (string key in parameters.Keys)

5: {

6: if (parameters[key] == null)

7: {

8: cmd.Parameters.AddWithValue(key, DBNull.Value);

9: }

10: else if (parameters[key].GetType().IsPrimitive)

11: {

12: cmd.Parameters.AddWithValue(key, parameters[key]);

13: }

14: else

15: {

16: cmd.Parameters.AddWithValue(key, "{0}".ToFormattedString(parameters[key]));

17: }

18:

19: }

20: }

Finally, the per record processing can be made into delegate method, allowing you the opportunity to add additional exception information to the process:

1: Func<SqlDataReader, bool> recordAction = (dr)=>{return true;};

2:

3: using (SqlDataReader dr = cmd.ExecuteReader())

4: {

5: int i = 0;

6: try

7: {

8: while (dr.Read() && recordAction(dr)) i++;

9: }

10: catch (Exception ex)

11: {

12: ex.Data["Record Ordinal"] = i;

13: throw;

14: }

15: }

Combining all this can give us a set of reusable ADO.Net extension methods, which allow us to separate the business concern from the ADO.Net concerns, so the example code a the top of the page can become:

1: private static IEnumerable<ContentAwaitingApproval> GetPendingApprovals(long approvalGroupId)

2: {

3: List<ContentAwaitingApproval> approvals = new List<ContentAwaitingApproval>();

4: "SELECT ContentId, LanguageId, Status FROM Approvals WHER ApprovalGroupID=@groupId"

5: .ProcessRecords(

6: Properties.Settings.Default.ConnectionStringName

7: , new Dictionary<string, object> { { "groupId", approvalGroupId } }

8: , (dr) =>

9: {

10: approvals.Add(new ContentAwaitingApproval

11: {

12: ContentId = dr.GetInt64(0)

13: ,

14: LanguageId = dr.GetInt32(1)

15: ,

16: Status = dr.GetString(2)

17: });

18: return true;

19: }

20: );

21: return approvals;

22: }

Every single line (all 3 if the readability whitespace is removed) are focused on the business side of the query with minimal ADO.Net specific requirements (if you count the connection string name as a ADO.Net specific).

The full (experimental) extension method class is:

1: using System;

2: using System.Configuration;

3: using System.Data.SqlClient;

4: using System.Linq;

5: using System.Collections.Generic;

6: using MartinOnDotNet.TypeConversion;

7:

8: namespace MartinOnDotNet.Data.SqlClient

9: {

10: /// <summary>

11: /// Handy methods for handling SQL

12: /// </summary>

13: public static class SqlClientExtensions

14: {

15: private static SqlConnection ToConnection(this string connectionStringName)

16: {

17: if (connectionStringName.IsNullOrWhiteSpace()) throw new ArgumentException("Connection String Name cannot be empty", "connectionStringName");

18: ConnectionStringSettings cns = ConfigurationManager.ConnectionStrings[connectionStringName];

19: if (cns == null) throw new ArgumentException("Connection String Name '{0}' cannot be found".ToFormattedString(connectionStringName), "connectionStringName");

20: return new SqlConnection(cns.ConnectionString);

21: }

22:

23: public static void Execute(this string commandText, string connectionStringName)

24: {

25: commandText.Execute(connectionStringName, null);

26: }

27:

28: public static void Execute(this string commandText, string connectionStringName, IDictionary<string, object> parameters)

29: {

30: PerformSqlAction<bool>(commandText, connectionStringName, parameters, (cmd) =>

31: {

32: cmd.ExecuteNonQuery();

33: return true;

34: });

35: }

36:

37: public static T ExecuteScalar<T>(this string commandText, string connectionStringName)

38: {

39: return commandText.ExecuteScalar<T>(connectionStringName, null);

40: }

41:

42: public static T ExecuteScalar<T>(this string commandText, string connectionStringName, IDictionary<string, object> parameters)

43: {

44: return PerformSqlAction<T>(commandText, connectionStringName, parameters, (cmd) =>

45: {

46: object value = cmd.ExecuteScalar();

47: if (value == DBNull.Value) return default(T);

48: return (T)value;

49: });

50: }

51:

52: public static void ProcessRecords(this string commandText, string connectionStringName, Func<SqlDataReader, bool> recordAction)

53: {

54: commandText.ProcessRecords(connectionStringName, recordAction);

55: }

56:

57: public static void ProcessRecords(this string commandText, string connectionStringName, IDictionary<string, object> parameters, Func<SqlDataReader, bool> recordAction)

58: {

59: PerformSqlAction<bool>(commandText, connectionStringName, parameters, (cmd) =>

60: {

61: using (SqlDataReader dr = cmd.ExecuteReader())

62: {

63: int i = 0;

64: try

65: {

66: while (dr.Read() && recordAction(dr)) i++;

67: }

68: catch (Exception ex)

69: {

70: ex.Data["Record Ordinal"] = i;

71: throw;

72: }

73: }

74: return true;

75: });

76: }

77:

78: private static void AddParametersToCommand(IDictionary<string, object> parameters, SqlCommand cmd)

79: {

80: if (parameters != null)

81: {

82: foreach (string key in parameters.Keys)

83: {

84: if (parameters[key] == null)

85: {

86: cmd.Parameters.AddWithValue(key, DBNull.Value);

87: }

88: else if (parameters[key].GetType().IsPrimitive)

89: {

90: cmd.Parameters.AddWithValue(key, parameters[key]);

91: }

92: else

93: {

94: cmd.Parameters.AddWithValue(key, "{0}".ToFormattedString(parameters[key]));

95: }

96:

97: }

98: }

99: }

100:

101: public static bool HasResults(this string commandText, string connectionStringName)

102: {

103: return commandText.HasResults(connectionStringName, null);

104: }

105:

106: public static bool HasResults(this string commandText, string connectionStringName, IDictionary<string, object> parameters)

107: {

108: return PerformSqlAction<bool>(commandText, connectionStringName, parameters, (cmd) =>

109: {

110: using (SqlDataReader dr = cmd.ExecuteReader())

111: {

112: return dr.Read();

113: }

114: });

115: }

116:

117: private static T PerformSqlAction<T>(string commandText, string connectionStringName, IDictionary<string, object> parameters, Func<SqlCommand, T> action)

118: {

119: if (commandText.IsNullOrWhiteSpace()) throw new ArgumentException("Command cannot be empty", "commandText");

120: if (action == null) throw new ArgumentNullException("action");

121: try

122: {

123: using (SqlConnection conn = connectionStringName.ToConnection())

124: using (SqlCommand cmd = conn.CreateCommand())

125: {

126: cmd.CommandText = commandText;

127: AddParametersToCommand(parameters, cmd);

128: conn.Open();

129: return action(cmd);

130: }

131: }

132: catch (Exception ex)

133: {

134: ex.Data["Connection String"] = connectionStringName;

135: ex.Data["Command"] = commandText;

136: if (parameters != null)

137: {

138: ex.Data["Parameters"] = parameters.Keys.Select(k => "{0} = '{1}'".ToFormattedString(k, parameters[k])).ToDelimitedString("\n");

139: }

140: throw;

141: }

142: }

143: }

144: }

![image_thumb[1] image_thumb[1]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEhsqEuYnqIlZYARym_grESlxvIsUsRKpxHeekmNm2okEtVDYwRmEpNngTstg75qZwkZhgjUQd0r5ZVMt0IK5ggdG1qb4f5BJn3TYZRoAVpBMLm-Tc43mE7KHicgNIV4adHhJW1iEi90/?imgmax=800)